Introduction to GPUs:

A GPU, or Graphics Processing Unit, is a specialized computer chip that is designed to handle tasks related to visual data processing and rendering. The main function of a GPU is to generate images and video, but they are also used for other computationally intensive tasks such as scientific simulations and machine learning.

GPUs differ from CPUs (Central Processing Units) in that they are designed to perform many simple and repetitive tasks in parallel. This is in contrast to CPUs, which are designed to handle a variety of tasks, but perform them in a sequential manner. GPUs are optimized for parallel processing, which makes them well suited for tasks that require a lot of data manipulation, such as rendering 3D graphics or running simulations.

The history of GPUs can be traced back to the late 1970s, when graphics chips were first introduced in computer systems. Over time, GPUs have become increasingly powerful and versatile, and have grown to become a critical component of many computing systems. In recent years, the demand for GPUs has increased significantly, driven by the growth of computer gaming and the rise of artificial intelligence and machine learning.

As an example, let’s consider a computer game that uses 3D graphics. The game generates a large number of objects and images, which need to be rendered in real-time as the player moves through the game world. A GPU is responsible for rendering these images, making it possible for the game to run smoothly and respond quickly to the player’s actions.

Understanding the Architecture of GPUs:

A GPU is made up of several key components, including the GPU core, memory, and buses.

The GPU core is the heart of the GPU, and is responsible for performing the calculations that generate images and video. A modern GPU can contain hundreds or even thousands of individual cores, each capable of performing simple calculations in parallel. This makes GPUs well-suited for handling large amounts of data simultaneously.

GPU memory, also known as VRAM (Video Random Access Memory), is used to store the data that the GPU cores process. VRAM is faster than the main system memory (RAM) and provides the GPU with the data it needs to perform its tasks.

The buses connecting the GPU to the rest of the computer system are also an important component of the GPU architecture. These buses transfer data between the GPU, VRAM, and the CPU, enabling the GPU to receive data to process and send the processed data back to the system.

GPUs are designed to handle parallel processing, which means that they are capable of performing many simple tasks at the same time. This is in contrast to CPUs, which are designed to handle a variety of tasks, but perform them in a sequential manner. The high core count and specialized architecture of GPUs allow them to perform many calculations simultaneously, making them well suited for tasks that require a lot of data manipulation, such as rendering 3D graphics or running simulations.

There are two main types of GPUs: consumer and professional. Consumer GPUs are designed for use in gaming and home computers, while professional GPUs are designed for use in high-performance computing systems, such as supercomputers and workstations. Professional GPUs are typically more expensive and have higher performance capabilities than consumer GPUs.

As an example, consider a scientist running a simulation to model the behavior of a complex system, such as weather patterns. The simulation generates a large amount of data, which the GPU must process to produce the final simulation results. A professional GPU, with its high core count and specialized architecture, is better equipped to handle this type of computationally intensive task than a consumer GPU.

The Role of GPUs in Computing

Gaming and 3D Graphics:

One of the main uses of GPUs is in the gaming industry. Graphics-intensive games require the processing of large amounts of visual data in real-time, and GPUs are designed to handle this type of task. A GPU can render the images, animations, and special effects in a game, making it possible for the game to run smoothly and respond quickly to the player’s actions. This allows for an immersive gaming experience, with high-quality graphics and fast frame rates.

Scientific and Financial Modeling:

GPUs are also used in scientific and financial modeling, where they are employed to perform complex simulations and data analysis. For example, in weather forecasting, GPUs can be used to run simulations of atmospheric conditions, providing a more accurate prediction of weather patterns. In the financial industry, GPUs can be used to perform risk assessments and to model financial markets, helping organizations make informed investment decisions.

Machine Learning and Artificial Intelligence:

In recent years, GPUs have become increasingly important for machine learning and artificial intelligence. Machine learning algorithms require the processing of large amounts of data, and GPUs are well suited for this type of task due to their ability to perform parallel processing. For example, a deep learning neural network can be trained on a large dataset using a GPU, which can significantly speed up the training process.

In artificial intelligence, GPUs are used for tasks such as image and speech recognition, where they are trained to recognize patterns in large amounts of data. For example, a GPU can be used to analyze images and identify objects or people in the pictures. This type of analysis can be used in a wide range of applications, such as security systems, medical imaging, and autonomous vehicles.

The Advancements in GPU Technology

Impact of New Technologies on GPU Performance:

Recent advancements in GPU technology have had a significant impact on performance. One example of this is the introduction of ray tracing technology, which provides more realistic and accurate lighting and shadow effects in computer graphics. Ray tracing allows for a greater degree of realism in games and other graphics-intensive applications, making them look more lifelike.

Another example is DLSS (Deep Learning Super Sampling), a technology developed by NVIDIA that uses deep learning algorithms to improve image quality and performance in games. DLSS works by upscaling images to a higher resolution, and then using deep learning algorithms to enhance the image quality, resulting in sharper, clearer images that run smoothly even at high resolutions.

Development of New Generations of GPUs:

The GPU industry is constantly evolving, with new generations of GPUs being developed and released regularly. For example, the recent launch of NVIDIA’s Ampere architecture and AMD’s RDNA2 architecture have brought significant performance improvements over their predecessors. The Ampere architecture, for example, features a more efficient design that allows for higher performance at lower power consumption, making it well suited for demanding applications such as gaming and machine learning.

Trend of Multi-GPU Systems:

The trend of multi-GPU systems is also driving the development of new GPU technology. Multi-GPU systems use multiple GPUs in a single system to increase performance and provide more computational power. This allows for even more demanding applications, such as large-scale scientific simulations and high-end gaming, to be run more effectively.

The Future of GPU Technology:

The future of GPU technology looks promising, with new innovations and advancements being made all the time. As demand for more computational power continues to grow, the GPU industry will continue to evolve and develop new technologies to meet the needs of consumers and businesses. This could include things like increased efficiency and lower power consumption, improved performance and capabilities, and new technologies such as quantum computing and neuromorphic computing.

Choosing the Right GPU for Your Needs

Factors to Consider When Choosing a GPU:

When choosing a GPU, there are several important factors to consider, including budget, performance requirements, and compatibility. For example, if you’re on a tight budget, you may need to prioritize affordability over performance. On the other hand, if you’re using your GPU for demanding applications such as gaming, video editing, or machine learning, you’ll need to focus on performance over cost.

In terms of compatibility, it’s important to make sure that the GPU you choose is compatible with your computer’s hardware and software. You’ll also need to consider the type of connector your GPU uses, as well as the size of the GPU and whether it will fit in your computer’s case.

Choosing the Right GPU for Specific Applications:

Different applications require different types of GPUs, and it’s important to choose the right GPU for the specific application you have in mind. For example, if you’re using your GPU for gaming, you’ll want to choose a GPU that provides high frame rates and fast refresh rates. On the other hand, if you’re using your GPU for video editing, you’ll want to choose a GPU that provides high levels of memory and processing power.

For machine learning, you’ll want to choose a GPU that provides high levels of memory and processing power, as well as the ability to perform matrix operations quickly. The GPU should also support popular machine learning frameworks, such as TensorFlow and PyTorch.

Recommendations for the Best GPUs on the Market:

When it comes to choosing the best GPUs on the market, it really depends on your specific needs and budget. However, some of the best GPUs currently available include the NVIDIA GeForce RTX 30 series, the AMD Radeon RX 6000 series, and the NVIDIA Titan RTX. These GPUs provide excellent performance, high memory capacity, and support for the latest technologies, making them ideal for a wide range of applications.

The Importance of GPUs in the World of Computing:

GPUs have become increasingly important in the world of computing over the years, playing a critical role in a wide range of applications, including gaming, video editing, machine learning, and artificial intelligence. The high levels of memory and processing power provided by GPUs allow for complex tasks to be performed quickly and efficiently, making them essential for many modern computing needs.

The Future of GPU Technology and its Impact on Society:

The future of GPU technology looks promising, with continued advancements in areas such as ray tracing, deep learning, and virtual reality. These advancements will likely have a significant impact on society, enabling new applications and capabilities in areas such as gaming, video production, and scientific research.

As GPUs become more powerful and more widely adopted, they will play an increasingly important role in shaping the future of computing and the world around us. Whether it’s through the creation of more realistic and immersive gaming experiences, the acceleration of scientific breakthroughs, or the development of new AI-powered technologies, the future of GPU technology looks bright.

In conclusion, GPUs are an essential component of modern computing, playing a critical role in many important applications and shaping the future of the technology industry. As technology continues to advance, GPUs will play an increasingly important role in our daily lives, and it will be exciting to see how they continue to impact society in the years to come.

CUDA(Compute Unified Device Architecture)

CUDA (Compute Unified Device Architecture) is a parallel computing platform and API developed by NVIDIA. It allows software developers to harness the power of NVIDIA GPUs for general-purpose computations, providing a straightforward and efficient way to perform complex computations on large amounts of data.

The CUDA platform consists of a software environment for writing and executing parallel programs, as well as a hardware architecture for processing data in parallel. The software environment provides a set of libraries, tools, and programming models for developers to use, while the hardware architecture provides the underlying parallel processing capabilities.

With CUDA, developers can write programs in a high-level programming language, such as C or Python, and then use the CUDA libraries and tools to execute them on an NVIDIA GPU. The GPU performs the computations in parallel, dividing the work across many cores in order to achieve significant performance gains over traditional CPU-based computations.

One of the key benefits of CUDA is that it provides a high-level, easy-to-use programming model that abstracts away many of the complexities of parallel programming. This makes it possible for developers with limited parallel programming experience to take advantage of the parallel processing capabilities of NVIDIA GPUs.

CUDA is widely used in a variety of fields, including scientific research, finance, and entertainment. For example, in scientific research, CUDA can be used to perform complex simulations and modeling tasks, such as molecular dynamics or climate modeling. In finance, CUDA can be used to perform high-speed financial calculations and risk analysis. In the entertainment industry, CUDA can be used for tasks such as video editing and special effects.

CUDA (Compute Unified Device Architecture) is a parallel computing platform and API developed by NVIDIA for programming their GPUs. It provides a way for developers to write programs that take advantage of the massive parallel processing power of NVIDIA GPUs to perform complex computations quickly.

Compiler and toolkit for programming NVIDIA GPUs:

CUDA is a compiler and toolkit that provides developers with the tools and libraries needed to program NVIDIA GPUs. It includes a C-based language for writing GPU kernels, as well as libraries for data parallelism, linear algebra, and other common tasks.

CUDA API extends the C programming language: The CUDA API extends the C programming language to allow for the creation and execution of GPU kernels, which are functions that run in parallel on many GPU cores. This makes it easier for developers to write programs that take advantage of the parallel processing capabilities of GPUs.

Runs on thousands of threads: CUDA is designed to handle large amounts of data and perform complex computations quickly by running the computation on thousands of threads in parallel. Each thread performs a small part of the overall computation, and the results are combined to produce the final result.

It is an scalable model: CUDA is designed to be scalable, which means that it can take advantage of multiple GPUs in a single system, as well as multiple GPU systems connected over a network. This allows developers to write programs that can scale to handle increasingly large amounts of data as needed.

Express parallelism: The main objective of CUDA is to make it easier for developers to express parallelism in their programs. This means that the developer can write code that takes advantage of the parallel processing capabilities of the GPU without having to deal with the complexities of parallel programming.

Give a high level abstraction from hardware: Another objective of CUDA is to provide a high-level abstraction from the underlying hardware, making it easier for developers to write programs that can run on different GPU models and generations without having to deal with the complexities of the underlying hardware.

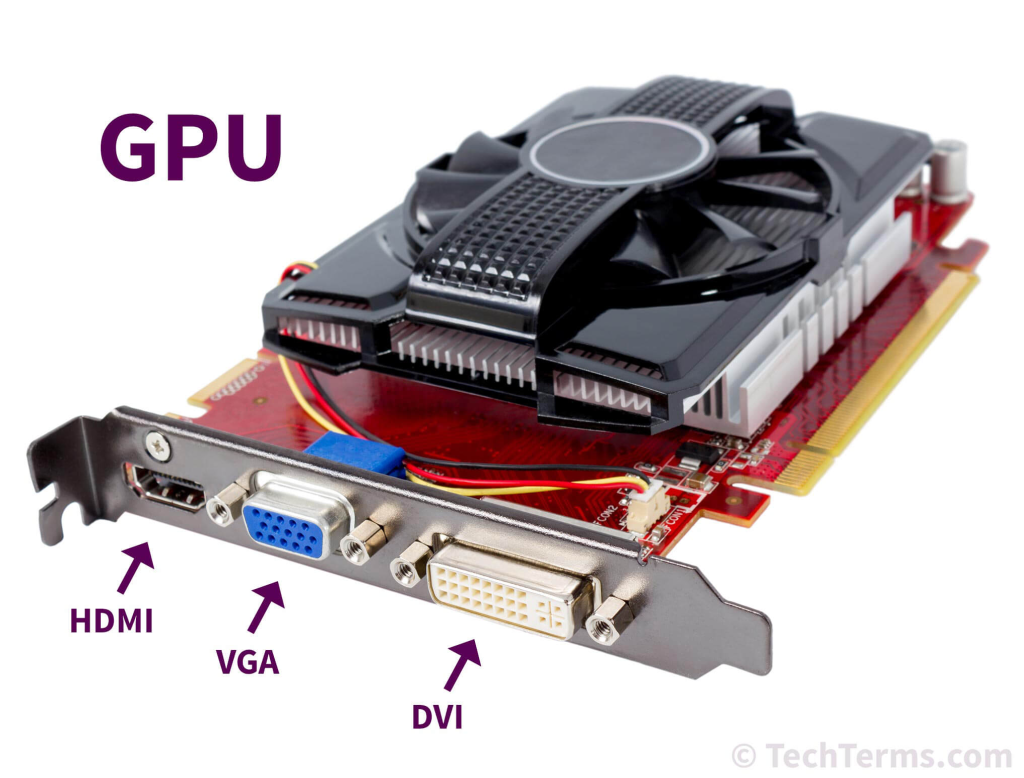

How a GPU looks like?

A GPU, or Graphics Processing Unit, is a specialized piece of hardware designed for performing complex graphical and computational tasks. Most computers today come with a GPU, as they are essential for handling the intensive graphics and computational demands of modern software.

Most computers have one: GPUs are a common feature of modern computers and can be found in laptops, desktops, workstations, and even some smartphones. The GPU is responsible for rendering images and video, as well as handling other computational tasks that are beyond the capabilities of the CPU.

Billions of transistors: A GPU is a highly complex piece of hardware, containing billions of transistors. These transistors are responsible for executing the instructions that the GPU receives from the computer, performing tasks such as rendering images and video, as well as handling other computational tasks.

Computing: A GPU is designed for high-performance computing and is capable of executing billions of calculations per second. In terms of single-precision floating-point performance, a GPU can achieve up to 1 Teraflop, or one trillion floating-point operations per second. For double-precision floating-point operations, a GPU can perform around 100 Gflops, or 100 billion floating-point operations per second.

A heater for winter time: A GPU can generate a significant amount of heat, which can be felt when using the computer. This heat is a result of the intense computational tasks that the GPU is performing and can be a useful source of warmth in cold weather.

Supercomputer for the masses: With their high-performance computing capabilities, GPUs have made supercomputing-level capabilities accessible to a much wider audience. This has enabled researchers, scientists, and engineers to perform complex computations that were previously only possible on large, specialized supercomputers.

In terms of physical appearance, a GPU is typically a small circuit board that is installed in the computer’s motherboard. The GPU contains several large chips, as well as numerous smaller components and cooling elements, such as fans or heat sinks. The size and complexity of the GPU can vary depending on the specific model and intended use.

CUDA language is vendor dependent?

CUDA language is vendor dependent: CUDA is a proprietary API developed by NVIDIA and is specific to NVIDIA GPUs. This means that programs written in CUDA will only run on NVIDIA GPUs and will not work on GPUs from other vendors.

OpenCL is going to become an industry standard: OpenCL (Open Computing Language) is an open standard for programming heterogeneous parallel computing systems, which includes CPUs, GPUs, and other types of processors. Some people believe that OpenCL will become the industry standard for parallel computing, as it provides a more open and flexible platform for programming parallel systems.

OpenCL is a low level specification, more complex to program with than CUDA C: OpenCL is designed to be a low-level specification that provides a lot of control and flexibility to the programmer. This makes it more complex to program with than CUDA C, which provides a higher level of abstraction and is easier to program with.

CUDA C is more mature and currently makes more sense: CUDA has been around for a long time and has a large community of users and developers. This has resulted in a large number of libraries, tools, and resources being developed for CUDA. For these reasons, CUDA C is considered to be more mature and to make more sense for many developers, especially those who are just getting started with parallel programming.

Porting CUDA to OpenCL should be easy in the future: Although OpenCL and CUDA have some differences, the underlying concepts of parallel computing are the same. This means that it should be relatively easy to port code written in CUDA to OpenCL in the future.

Here’s a simple example of how you could perform a vector addition in CUDA C:

__global__ void vectorAdd(float *A, float *B, float *C, int numElements) {

int i = blockIdx.x * blockDim.x + threadIdx.x;

if (i < numElements) {

C[i] = A[i] + B[i];

}

}

int main() {

// Allocate memory on the GPU

float *A, *B, *C;

cudaMalloc((void **)&A, numElements * sizeof(float));

cudaMalloc((void **)&B, numElements * sizeof(float));

cudaMalloc((void **)&C, numElements * sizeof(float));

// Copy data from host to device

cudaMemcpy(A, hostA, numElements * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(B, hostB, numElements * sizeof(float), cudaMemcpyHostToDevice);

// Launch the vectorAdd kernel on the GPU

vectorAdd<<<numBlocks, blockSize>>>(A, B, C, numElements);

// Copy data from device to host

cudaMemcpy(hostC, C, numElements * sizeof(float), cudaMemcpyDeviceToHost);

// Free memory on the GPU

cudaFree(A);

cudaFree(B);

cudaFree(C);

return 0;

}GPU Computing features

Fast GPU cycle: NVIDIA releases new GPU hardware every 18 months or so, providing developers with access to increasingly powerful and efficient GPUs. This allows developers to take advantage of new hardware features and performance improvements.

Requires special programming but similar to C: GPU programming is different from traditional CPU programming, but the CUDA programming language is similar to C and easy to learn for those familiar with C.

CUDA code is forward compatible with future hardware: CUDA code written for a specific GPU model can run on future hardware without modification, making it easier to maintain and scale GPU applications over time.

Cheap and available hardware: GPUs for GPU computing can range in price from £200 to £1000, making them relatively affordable for many developers and organizations. Additionally, GPUs are widely available from many suppliers, making it easy to obtain the hardware needed for GPU computing.

Number crunching: One GPU can perform over a teraflop of computation, which is equivalent to a small cluster of CPU-based computers. This makes GPUs well suited for applications that require a lot of computational power, such as scientific simulations, machine learning, and data analytics.

Small factor of the GPU: GPU computing requires a relatively small amount of hardware compared to CPU-based computing. This means that developers can take advantage of the power of GPUs without having to invest in a large amount of hardware.

Power and cooling: When using GPUs for GPU computing, it’s important to consider the power and cooling requirements of the hardware. GPUs consume a significant amount of power and generate a lot of heat, so it’s important to have a system with adequate power and cooling to ensure stable operation.

Here’s a simple example of how you could use CUDA to perform a simple matrix multiplication on the GPU:

#include <iostream>

#include <cuda_runtime.h>

// Define the size of the matrix

#define numRows 1024

#define numCols 1024

// GPU kernel to perform matrix multiplication

__global__ void matrixMult(float *A, float *B, float *C, int numRows, int numCols) {

int row = blockIdx.y * blockDim.y + threadIdx.y;

int col = blockIdx.x * blockDim.x + threadIdx.x;

if (row < numRows && col < numCols) {

float result = 0;

for (int i = 0; i < numCols; i++) {

result += A[row * numCols + i] * B[i * numCols + col];

}

C[row * numCols + col] = result;

}

}

int main() {

// Allocate memory on the host for the input matrices

float *hostA = new float[numRows * numCols];

float *hostB = new float[numRows * numCols];

// Initialize the matrices with some values

for (int i = 0; i < numRows * numCols; i++) {

hostA[i] = (float)i;

hostB[i] = (float)i * 2;

}

// Allocate memory on the GPU

float *A, *B, *C;

cudaMalloc((void **)&A, numRows * numCols * sizeof(float));

cudaMalloc((void **)&B, numRows * numCols * sizeof(float));

cudaMalloc((void **)&C, numRows * numCols * sizeof(float));

// Copy data from host to device

cudaMemcpy(A, hostA, numRows * numCols * sizeof(float), cudaMemcpyHostToDevice);

cudaMemcpy(B, hostB, numRows * numCols * sizeof(float), cudaMemcpyHostToDevice);

// Define the grid and block dimensions for the kernel

dim3 gridDim(numRows / 32, numCols / 32);

dim3 blockDim(32, 32);

// Launch the matrixMult kernel on the GPU

matrixMult<<<gridDim, blockDim>>>(A, B, C, numRows, numCols);

// Copy the result from the device to the host

float *result = new float[numRows * numCols];

cudaMemcpy(result, C, numRows * numCols * sizeof(float), cudaMemcpyDeviceToHost);

// Clean up memory

delete [] hostA;

delete [] hostB;

delete [] result;

cudaFree(A);

cudaFree(B);

cudaFree(C);

return 0;

}Whats better?

What do you need?

Cuda architecture

The CUDA architecture is built upon the following components:

GPU: The CUDA architecture is designed to take advantage of the massive parallel processing power of NVIDIA GPUs. The GPU is the primary component of the architecture and performs the majority of the computational tasks.

Host: The host is the central processing unit (CPU) of the system, which interacts with the GPU and manages the memory and data transfers between the host and the GPU.

CUDA API: The CUDA API provides a high-level programming model for the GPU and includes a set of functions and libraries for accessing the GPU and performing computations on the GPU. The API supports a wide range of programming languages, including C, C++, Fortran, and Python.

CUDA Libraries: The CUDA libraries provide a set of pre-written functions for performing common computational tasks, such as matrix multiplication, convolution, and FFTs. These libraries help simplify the programming process and allow developers to focus on the logic of their algorithms.

CUDA Cores: CUDA cores are the processing units within the GPU that perform the computational tasks. Each GPU has hundreds or thousands of CUDA cores, and they work together in parallel to perform the computations.

CUDA Threads: A CUDA thread is a unit of execution that runs on a CUDA core. Multiple threads can run in parallel on the GPU, allowing the GPU to perform multiple tasks simultaneously.

CUDA Grids and Blocks: CUDA grids and blocks are used to organize and manage the execution of CUDA threads on the GPU. Grids are a collection of blocks, and blocks are a collection of threads. The number of grids, blocks, and threads can be specified by the programmer, allowing for fine-grained control over the execution of the computations.

The strong points of CUDA can be summarized as follows:

Abstraction from the hardware: The CUDA API provides a high-level programming model that abstracts the underlying hardware, making it easier for developers to program the GPU without having to worry about low-level details. This allows developers to focus on the logic of their algorithms and not have to worry about the underlying hardware.

Forward compatibility: CUDA code is forward compatible with future hardware, allowing developers to write code once and have it run on future NVIDIA GPUs without any modifications. This makes it easier for developers to take advantage of new hardware advancements without having to rewrite their code.

Automatic thread management: CUDA can handle hundreds of thousands of threads, making it easier for developers to scale their computations to take advantage of the parallel processing power of the GPU. The CUDA runtime automatically manages the creation and execution of threads, freeing up developers to focus on the logic of their algorithms.

Multithreading: CUDA provides transparent multithreading, which helps to hide latency and maximize the GPU utilization. This makes it easier for developers to parallelize their computations and take full advantage of the parallel processing power of the GPU.

Transparent for the programmer: The CUDA API is designed to be transparent for the programmer, making it easier for developers to program the GPU without having to worry about the underlying hardware or manage low-level details. This allows developers to focus on the logic of their algorithms and not have to worry about the underlying hardware.

Limited synchronization between threads: CUDA provides limited synchronization between threads, allowing for safe and efficient communication between threads. This makes it easier for developers to parallelize their computations and ensure that the results are accurate and consistent.

No message passing: CUDA does not use message passing between threads, making it less prone to deadlocks. This makes it easier for developers to parallelize their computations and ensure that the results are accurate and consistent.

Programmer effort in CUDA programming refers to the steps required to successfully program a GPU and take advantage of its parallel processing capabilities. There are several factors that need to be considered when programming a GPU using CUDA, and each of these factors affects the amount of effort required from the programmer.

Analyze algorithm for exposing parallelism: This refers to the process of identifying areas in the algorithm that can be executed in parallel. The goal is to break down the algorithm into smaller, independent tasks that can be executed simultaneously on the GPU. This is often done using pen and paper, where the programmer draws a diagram of the algorithm and identifies the parts that can be executed in parallel.

Block size: The block size refers to the number of threads that are grouped together and executed in parallel. The block size is a crucial factor in determining the performance of the GPU, as it affects the amount of data that can be processed simultaneously. The block size should be chosen based on the memory constraints of the GPU and the amount of data that needs to be processed.

Number of threads: The number of threads refers to the total number of parallel tasks that will be executed on the GPU. The number of threads should be chosen to ensure that the GPU is kept busy, with limited resources.

Global data set: The global data set refers to the data that is shared by all threads and is accessible from anywhere in the program. In order to achieve efficient data transfers, it is important to minimize the amount of data that needs to be transferred between the host and the GPU.

Local data set: The local data set refers to the data that is only accessible to a specific thread. This data is stored in the limited on-chip memory of the GPU and is used to store intermediate results during the execution of the algorithm.

Register space: The register space refers to the limited on-chip memory of the GPU that is used to store data during the execution of the algorithm. The register space is a scarce resource, and it is important to minimize its usage to ensure efficient performance of the GPU.

In conclusion, programming a GPU using CUDA requires careful consideration of several factors, including the algorithm, block size, number of threads, global data set, local data set, and register space. The goal is to keep the GPU busy, with limited resources, while ensuring efficient data transfers and minimizing the usage of limited on-chip memory.

Memory Hierarchy

The memory hierarchy in a GPU refers to the different types of memory available in the graphic card and how they are used to store and process data. There are three main types of memory in a GPU: global memory, shared memory, and registers.

Global Memory: Global memory is the largest type of memory available in a GPU, with typical sizes ranging from 4GB or more. Global memory is used to store data that is required by multiple threads and is accessible from anywhere in the program. Global memory is typically slower than the other types of memory in the GPU, with latency ranging from 400-600 cycles.

Shared Memory: Shared memory is a type of memory that is shared by multiple threads within a block. The size of shared memory is typically 16 KB or less. Shared memory is faster than global memory and is used to store data that is required by multiple threads within a block. This type of memory is used for thread collaboration, where threads within a block can communicate and exchange data.

Registers: Registers are a type of memory that is specific to a single thread. The size of registers is typically 16 KB or less. Registers are the fastest type of memory in the GPU and are used to store data that is specific to a single thread. Registers are used to store intermediate results during the execution of the algorithm and to store local variables.

Latency refers to the time it takes for a memory operation to complete. In the context of GPU memory, the latency of each type of memory affects the overall performance of the GPU.

Latency of Global Memory: The latency of global memory is typically 400-600 cycles, making it slower than the other types of memory in the GPU. The reason for this is that global memory is used to store data that is required by multiple threads, and accessing data from global memory requires more time compared to accessing data from other types of memory.

Latency of Shared Memory: Shared memory has a faster latency compared to global memory, making it suitable for storing data that is required by multiple threads within a block. The faster latency of shared memory enables threads within a block to collaborate and exchange data more efficiently.

Latency of Registers: Registers have the fastest latency of all the types of memory in the GPU. This is because registers are specific to a single thread and do not need to be shared between threads. The fast latency of registers makes them ideal for storing intermediate results during the execution of the algorithm and for storing local variables.

The purpose of each type of memory in the GPU is as follows:

Purpose of Global Memory: Global memory is used as IO for the grid. It is used to store data that is required by multiple threads and is accessible from anywhere in the program.

Purpose of Shared Memory: Shared memory is used for thread collaboration. It is used to store data that is required by multiple threads within a block, enabling threads within a block to communicate and exchange data.

Purpose of Registers: Registers are used for thread space. They are used to store data that is specific to a single thread, including intermediate results during the execution of the algorithm and local variables. The fast latency of registers makes them ideal for storing data that is required frequently by a single thread.

Thread hierarchy

The thread hierarchy in CUDA programming refers to the organization of threads into blocks and grids for efficient execution on a GPU. It is an important concept in CUDA programming and is essential for understanding how the GPU processes parallel tasks.

Kernels: Kernels are simple C programs that are executed by threads on the GPU. Each kernel is designed to perform a specific task and is executed by thousands of threads in parallel.

Threads: Each thread has its own unique ID, and is responsible for executing a portion of the kernel. The ID of each thread can be accessed within the kernel, allowing it to perform different tasks based on its ID.

Blocks: Threads are grouped into blocks, and each block contains a group of threads that can be executed in parallel. Threads within a block can also synchronize their execution, ensuring that they complete a specific task before moving on to the next.

Grid: Blocks are grouped into a grid, and each grid contains a set of blocks that can be executed in any order. This allows the GPU to execute blocks in parallel, increasing the overall performance of the GPU.

Example:

Suppose we have a kernel that calculates the square of a number. The kernel can be executed by thousands of threads, each with its own unique ID. The threads can be grouped into blocks, with each block containing 128 threads. The blocks can then be grouped into a grid, with each grid containing multiple blocks. The GPU will execute the kernel for each thread, and the result of each calculation will be stored in the global memory of the GPU.

In this example, the thread hierarchy allows us to take advantage of the parallel processing capabilities of the GPU, by executing the same kernel for thousands of threads in parallel. This results in a significant speedup compared to executing the same task on a CPU.

Workflow

The workflow for GPU programming typically involves several steps, as described below:

Memory allocation: The first step in the workflow is to allocate memory on the GPU. This involves reserving space in the GPU memory for the data that will be processed.

Memory copy: Host -> GPU: After memory has been allocated, the next step is to copy data from the host memory (the CPU) to the GPU memory. This step is important for preparing the data for processing on the GPU.

Kernel call: The next step is to call the GPU kernel, which is the code that will be executed on the GPU. The kernel specifies the computation to be performed on the data in the GPU memory.

Memory copy: GPU -> Host: After the kernel has been executed, the results are copied back from the GPU memory to the host memory. This step is necessary to retrieve the results of the computation for further processing on the CPU.

Free GPU memory: Finally, the GPU memory that was previously allocated is freed to avoid memory leaks. This step is important to ensure that the GPU memory is available for other tasks and to prevent any performance degradation due to memory constraints.