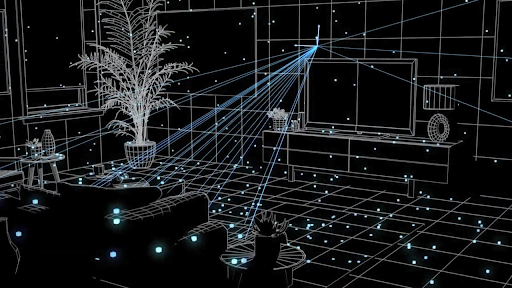

Visual Simultaneous Localization and Mapping (vSLAM) is a technology that enables robots and autonomous systems to understand their environment and navigate within it. This technology plays a crucial role in a wide range of applications, such as autonomous vehicles, augmented reality, robotics, and drone navigation. In this article, we will explore the basic concepts of Visual SLAM, its applications, and the latest advancements in the field.

Visual SLAM is a type of simultaneous localization and mapping (SLAM) that uses visual information from cameras and other sensors to create a map of the environment and to determine the position of the robot in that environment. It is an essential technology for robotic systems that operate in unknown or changing environments, such as in search and rescue missions, industrial inspection, and agriculture.

The basic idea behind Visual SLAM is to extract features from the images captured by the cameras and to use these features to determine the motion and position of the robot. This information is then used to create a map of the environment and to update the robot’s position in real-time. The creation of the map and the localization of the robot are achieved simultaneously, hence the name Simultaneous Localization and Mapping (SLAM).

Visual Simultaneous Localization and Mapping (vSLAM) is a technology for estimating the motion of sensors and reconstructing structures in unknown environments using visual information. The development of vSLAM has been a topic of research in various fields, including computer vision, augmented reality, and robotics, and has seen significant advances since 2010. The algorithms developed for vSLAM can be classified into three categories: feature-based, direct, and RGB-D camera-based.

The basic framework of vSLAM is composed of three modules:

1) Initialization

2) Tracking

3) Mapping.

1) Initialization

Definition: The process of setting up the initial state of the camera and the environment.

Methods: This can be done through various methods, including manual initialization, detection of planar structures, or estimation from motion.

Purpose: The purpose of initialization is to provide a starting point for the subsequent tracking and mapping processes.

2) Tracking

Definition: The process of estimating the camera pose (position and orientation) over time.

Methods: This can be done through various methods, including feature tracking, direct methods, or optical flow.

Purpose: The purpose of tracking is to provide a real-time estimate of the camera’s pose, which is used for mapping and to keep the map up-to-date.

3) Mapping

Definition: The process of building a map of the environment from the input obtained from the camera.

Methods: This can be done through various methods, including feature-based mapping, direct methods, or dense reconstruction.

Purpose: The purpose of mapping is to provide a representation of the environment that can be used for navigation, localization, or scene understanding.

In the Initialization stage, the global coordinate system is defined, and a part of the environment is reconstructed as an initial map. The Tracking stage continuously estimates the camera poses based on visual information, and the Mapping stage reconstructs the 3D structure of the environment. The key challenge in vSLAM is to accurately estimate camera poses and reconstruct the environment while working with limited visual input and limited computational resources.
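The interplay of the three modules can be sketched with a deliberately tiny example. This is a toy, one-dimensional illustration rather than a real vSLAM system: the class `ToySlam`, its `process` method, and the frame format (a dictionary mapping a landmark ID to its measured offset from the camera) are all assumptions made for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class ToySlam:
    """Hypothetical 1D SLAM loop: initialization, tracking, mapping."""
    initialized: bool = False
    pose: float = 0.0                               # camera position on a line
    landmarks: dict = field(default_factory=dict)   # landmark id -> position

    def process(self, frame: dict) -> float:
        """frame maps landmark id -> offset (landmark position minus camera position)."""
        if not self.initialized:
            # Initialization: the first camera position defines the global origin,
            # and the first observations become the initial map.
            self.pose = 0.0
            self.landmarks = dict(frame)
            self.initialized = True
            return self.pose

        # Tracking: estimate the pose from landmarks that are already in the map.
        known = [lid for lid in frame if lid in self.landmarks]
        if not known:
            raise RuntimeError("tracking lost: no known landmarks in view")
        self.pose = sum(self.landmarks[lid] - frame[lid] for lid in known) / len(known)

        # Mapping: add landmarks seen for the first time, using the tracked pose.
        for lid, offset in frame.items():
            self.landmarks.setdefault(lid, self.pose + offset)
        return self.pose


slam = ToySlam()
slam.process({"a": 2.0, "b": 5.0})          # initialization
pose = slam.process({"b": 4.0, "c": 7.0})   # tracking, then mapping of "c"
```

In the second call, landmark "b" is already in the map, so it anchors the pose estimate, and the new landmark "c" is placed relative to that pose. Real systems follow the same order of operations with 6-DoF poses and noisy image measurements.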

The feature-based approach, one of the earliest vSLAM methods, tracks and maps feature points in an image. This approach has limitations in texture-less or feature-less environments. To address this issue, the direct approach was proposed, which does not detect feature points and instead uses the whole image for tracking and mapping. The RGB-D camera-based approach uses both a monocular image and its depth, making it suitable for low-cost RGB-D sensors such as Microsoft Kinect.
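The difference between the feature-based and direct approaches shows up in the kind of error each one minimizes. The sketch below is a simplified illustration: the function names `feature_residual` and `photometric_error` are hypothetical, images are plain nested lists of grayscale intensities, and a real system would minimize these errors over the camera pose rather than just evaluate them.

```python
import math


def feature_residual(predicted, observed):
    """Feature-based: mean pixel distance between predicted and observed keypoints."""
    return sum(math.dist(p, q) for p, q in zip(predicted, observed)) / len(predicted)


def photometric_error(image_a, image_b):
    """Direct: mean squared intensity difference over every pixel, no keypoints needed."""
    diffs = [
        (a - b) ** 2
        for row_a, row_b in zip(image_a, image_b)
        for a, b in zip(row_a, row_b)
    ]
    return sum(diffs) / len(diffs)


# Two keypoints: one predicted exactly, one off by a 3-4-5 triangle.
geometric = feature_residual([(0, 0), (3, 4)], [(0, 0), (0, 0)])

# Two 2x2 grayscale images that differ in a single pixel.
photometric = photometric_error([[10, 20], [30, 40]], [[10, 22], [30, 40]])
```

The geometric error depends only on a sparse set of keypoints, which is why it degrades when few features are detectable, while the photometric error uses every pixel.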

There are still technical challenges that need to be addressed in vSLAM, such as handling large-scale environments, robustness to changes in lighting conditions, and real-time performance on lightweight handheld devices. To evaluate the performance of vSLAM algorithms, various datasets have been developed, along with benchmarking methodologies for comparing different algorithms.

Elements of vSLAM

vSLAM (Visual Simultaneous Localization and Mapping) is a computer vision technique used in robotics and autonomous systems to estimate the camera’s location and build a map of the environment in real time. Here are the key elements of vSLAM in detail:

Camera and Image Processing: The primary source of information for vSLAM is a camera that captures images of the environment. The image data is then processed using computer vision algorithms to extract features such as corners, edges, and textures.

Feature Detection and Description: Feature detection algorithms are used to identify and extract keypoints in the images, which are then described using a descriptor. This helps in matching features between different images, which is important for tracking the camera’s position.
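As a rough illustration of descriptor matching, the sketch below compares binary descriptors by Hamming distance and keeps a match only if it is clearly better than the second-best candidate (a ratio test). The descriptors are short integers invented for this example, and `match_descriptors` with its `ratio` parameter is a hypothetical helper, not the API of any particular library.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(query, train, ratio=0.8):
    """Return (query_index, train_index, distance) for unambiguous matches."""
    matches = []
    for qi, q in enumerate(query):
        # Sort candidates by distance so the best and second best come first.
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        if not dists:
            continue
        best_dist, best_idx = dists[0]
        # Ratio test: reject the match if the runner-up is almost as close.
        if len(dists) == 1 or best_dist < ratio * dists[1][0]:
            matches.append((qi, best_idx, best_dist))
    return matches


# 8-bit toy descriptors from two images (assumed values).
image_1 = [0b11110000, 0b00001111]
image_2 = [0b00001110, 0b11110001, 0b10101010]
matches = match_descriptors(image_1, image_2)

# An ambiguous case: both candidates are equally close, so nothing is matched.
ambiguous = match_descriptors([0b1111], [0b1110, 0b0111])
```

Rejecting ambiguous matches matters because a single wrong correspondence can noticeably bias the pose estimate that is computed from the matches.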

Pose Estimation: Pose estimation refers to the estimation of the camera’s orientation and position in the world. It is the process of estimating the transformation between the camera and the world coordinate frame based on the matching of features in different images.
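To make the idea of estimating a transformation from matched features concrete, here is a simplified planar analogue: given matched 2D points, it recovers the rotation and translation that align them in closed form. This is a sketch under strong assumptions (2D instead of 3D, perfect matches, no outliers); real vSLAM systems estimate 6-DoF poses with techniques such as essential matrix decomposition or PnP, and `estimate_rigid_2d` is a hypothetical name.

```python
import math


def estimate_rigid_2d(src, dst):
    """Least-squares rotation angle and translation such that dst ~ R(theta) * src + t."""
    n = len(src)
    csx = sum(p[0] for p in src) / n
    csy = sum(p[1] for p in src) / n
    cdx = sum(q[0] for q in dst) / n
    cdy = sum(q[1] for q in dst) / n

    # Accumulate dot and cross products of the centered point pairs.
    s_dot = s_cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - csx, py - csy
        bx, by = qx - cdx, qy - cdy
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the destination centroid.
    tx = cdx - (c * csx - s * csy)
    ty = cdy - (s * csx + c * csy)
    return theta, (tx, ty)


# Points rotated by 90 degrees and shifted by (2, 3).
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
dst = [(2.0, 3.0), (2.0, 4.0), (1.0, 3.0)]
theta, (tx, ty) = estimate_rigid_2d(src, dst)
```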

Map Building: The vSLAM algorithm builds a map of the environment by creating a 3D representation of the world based on the camera’s pose estimation and the feature matching process. In keyframe-based systems, the map is often represented as a graph of keyframes, with each keyframe representing a set of features and their positions in the world coordinate frame.

Loop Closure Detection: Loop closure detection is a critical component of vSLAM that detects when the camera has revisited a previously explored location. Recognizing a revisit lets the system correct accumulated drift and maintain the accuracy of the map over time.
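One common way to recognize a revisited place is to compare the appearance of the current image with stored keyframes. The sketch below assumes each keyframe has already been summarized as a histogram of "visual word" IDs (a bag-of-words representation); the word IDs, the `threshold`, and the `min_gap` parameter are illustrative assumptions, not values from any specific system.

```python
import math
from collections import Counter


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two visual-word histograms."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def detect_loop(keyframes, current, threshold=0.8, min_gap=2):
    """Index of the most similar older keyframe, or None if nothing is similar enough."""
    # Skip the most recent keyframes: they always look similar to the current view.
    candidates = keyframes[:-min_gap] if min_gap else keyframes
    best_idx, best_score = None, threshold
    for i, kf in enumerate(candidates):
        score = cosine_similarity(kf, current)
        if score >= best_score:
            best_idx, best_score = i, score
    return best_idx


keyframes = [
    Counter({1: 3, 2: 1}),
    Counter({5: 2, 6: 2}),
    Counter({7: 4}),
    Counter({8: 1, 9: 3}),
]
revisit = detect_loop(keyframes, Counter({1: 3, 2: 1, 3: 1}))  # resembles keyframe 0
no_loop = detect_loop(keyframes, Counter({8: 1, 9: 3}))        # only matches a recent keyframe
```

A detected loop is typically verified geometrically before it is used, since two different places can look alike.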

Pose Graph Optimization: Pose graph optimization is the process of refining the estimated camera poses and the map representation. It uses non-linear optimization techniques to minimize the inconsistency between the estimated camera poses and the relative-motion constraints measured between them, including loop closure constraints.
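The effect of a loop closure on a pose graph can be shown with a one-dimensional toy problem. In this sketch, poses are positions on a line, each edge `(i, j, z)` says "pose j minus pose i was measured as z", and plain gradient descent minimizes the sum of squared edge residuals. The function name, step size, and measurement values are assumptions for illustration; real systems optimize 6-DoF poses with dedicated non-linear least-squares solvers.

```python
def optimize_pose_graph(num_poses, edges, iterations=2000, step=0.1):
    """Minimize the sum of ((x[j] - x[i]) - z)^2 over all edges, with x[0] fixed at 0."""
    x = [0.0] * num_poses
    for _ in range(iterations):
        grad = [0.0] * num_poses
        for i, j, z in edges:
            residual = (x[j] - x[i]) - z
            grad[j] += 2.0 * residual
            grad[i] -= 2.0 * residual
        grad[0] = 0.0  # anchor the first pose to remove the free global offset
        x = [xi - step * g for xi, g in zip(x, grad)]
    return x


# Odometry overestimates each step (1.1 instead of 1.0), so the last pose
# drifts to 3.3. A loop closure measures the true offset from pose 0 to pose 3.
odometry = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, 1.1)]
loop_closure = [(0, 3, 3.0)]
poses = optimize_pose_graph(4, odometry + loop_closure)
```

With equal weights on all edges, the optimizer spreads the 0.3 discrepancy over the whole trajectory instead of leaving it all at the last pose, which is the basic mechanism by which loop closures reduce drift.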

Map Management and Representation: The map generated by vSLAM must be efficiently managed and stored for later use. The representation of the map depends on the application and can be in the form of a 2D or 3D point cloud, a voxel grid, or a combination of both.
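As a small example of how a point cloud relates to a voxel grid, the sketch below buckets 3D points into cubic cells and keeps one averaged point per occupied cell. The function name `voxelize` and the cell size are assumptions for illustration.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Map each occupied voxel index (ix, iy, iz) to the centroid of its points."""
    cells = defaultdict(list)
    for x, y, z in points:
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        cells[key].append((x, y, z))
    # Average the points in each cell coordinate by coordinate.
    return {
        key: tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for key, pts in cells.items()
    }


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0), (-0.1, 0.0, 0.0)]
grid = voxelize(cloud, voxel_size=0.5)
```

The first two points fall into the same cell and are merged, so the grid stores three entries for four input points. Larger cells trade map detail for lower memory use.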

These are the key elements of vSLAM, and a well-designed vSLAM system must consider each of these elements to achieve accurate and efficient performance.

Components of SLAM

The components of a Simultaneous Localization and Mapping (SLAM) system are:

Sensors: This includes cameras, laser rangefinders, IMUs, etc. to gather data about the environment.

Feature Detection and Description: This component is responsible for detecting and describing unique and distinctive features in the environment that can be used for mapping and localization.

Data Association: This component is responsible for associating measurements from the sensors with the map features.

State Estimation: This component uses the measurements from the sensors and the map features to estimate the pose of the robot in the environment.

Mapping: This component is responsible for building a map of the environment using the measurements from the sensors and the estimated pose of the robot.

Loop Closing: This component is responsible for detecting when the robot revisits a previously visited location and for updating the map accordingly.

Map Management: This component is responsible for storing, updating, and managing the map over time as new data is collected.

Motion Model: This component models the motion of the robot and predicts its future position.

Measurement Model: This component models the measurement process and predicts the expected measurements based on the robot’s pose and the map.

Optimization: This component uses various optimization algorithms to estimate the robot’s pose and the map parameters that maximize the likelihood of the observed measurements.
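The motion model and measurement model listed above can be sketched for a planar robot. This is a minimal illustration assuming a pose of the form `(x, y, theta)`, velocity commands `v` and `omega`, and a sensor that reports range and bearing to a landmark; noise is left out, and the function names are hypothetical.

```python
import math


def motion_model(pose, v, omega, dt):
    """Predict the next pose from forward speed v and turn rate omega over time dt."""
    x, y, theta = pose
    return (
        x + v * dt * math.cos(theta),
        y + v * dt * math.sin(theta),
        theta + omega * dt,
    )


def measurement_model(pose, landmark):
    """Predict the range and bearing at which a landmark should be observed."""
    x, y, theta = pose
    dx, dy = landmark[0] - x, landmark[1] - y
    rng = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx) - theta
    # Wrap the bearing into the interval [-pi, pi].
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return rng, bearing


predicted_pose = motion_model((0.0, 0.0, 0.0), v=1.0, omega=0.0, dt=2.0)
expected_range, expected_bearing = measurement_model((0.0, 0.0, math.pi / 2), (0.0, 2.0))
```

State estimation and optimization then work on the difference between the actual sensor reading and the prediction from `measurement_model`.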

Components of vSLAM

The main components of a visual SLAM (Simultaneous Localization and Mapping) system are:

Camera model: A model of the camera’s intrinsic parameters (such as focal length, principal point, and distortion coefficients) and extrinsic parameters (such as position and orientation in the world).

Feature extraction: A process to identify and extract interesting and informative features from the images captured by the camera, such as corners or edges.

Feature tracking: A process to track the movement of the features from one image to the next to estimate the motion of the camera between frames.

Pose estimation: A process to estimate the position and orientation of the camera based on the motion of the features and the camera model.

Map representation: A representation of the environment, typically as a collection of 3D points, lines, or surface patches.

Data association: A process to determine which features in one frame correspond to features in another frame, so that their motion can be used to estimate the camera’s motion.

Loop closing: A process to detect when the camera has revisited a location it has previously been to and correct the accumulated error in the map and camera pose estimates.

Map optimization: A process to refine the map and camera pose estimates by minimizing the error between the observed and predicted feature positions.

Visual odometry: A process to estimate the camera’s motion between consecutive frames without the need for a pre-existing map.

Keyframe management: A process to decide when to add a new keyframe to the map, typically based on the camera’s motion, the density of features in the image, or the accuracy of the map.
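The camera model and the "observed versus predicted feature positions" used in map optimization can be illustrated with an ideal pinhole camera. The sketch below assumes no lens distortion, a world-to-camera transform given by a rotation matrix `R` and translation `t`, and illustrative intrinsic values; `project` and `reprojection_error` are hypothetical helper names.

```python
import math


def project(point_world, R, t, fx, fy, cx, cy):
    """Project a 3D world point to pixel coordinates with an ideal pinhole model."""
    # Extrinsics: transform the point from world to camera coordinates.
    xc, yc, zc = (
        sum(R[i][k] * point_world[k] for k in range(3)) + t[i] for i in range(3)
    )
    if zc <= 0:
        return None  # the point is behind the camera and cannot be observed
    # Intrinsics: perspective division, then focal length and principal point.
    return (fx * xc / zc + cx, fy * yc / zc + cy)


def reprojection_error(observed, predicted):
    """Pixel distance between an observed feature and its predicted projection."""
    return math.hypot(observed[0] - predicted[0], observed[1] - predicted[1])


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
intrinsics = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0)  # assumed values

pixel = project((1.0, 0.5, 2.0), identity, (0.0, 0.0, 0.0), **intrinsics)
behind = project((0.0, 0.0, -1.0), identity, (0.0, 0.0, 0.0), **intrinsics)
error = reprojection_error((573.0, 369.0), pixel)
```

Map optimization adjusts the camera poses and 3D points so that this error becomes small across all observations.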

Advantages of Visual SLAM:

Real-time operation: Visual SLAM can operate in real-time and provide accurate estimates of camera position and environment mapping.

Low computational requirements: Many Visual SLAM algorithms are designed to be computationally efficient and can run on a range of hardware, including smartphones and low-power embedded systems, although dense or large-scale mapping remains demanding.

Robustness: Visual SLAM algorithms are designed to be robust to various types of environmental changes, such as lighting and texture changes, which makes them ideal for use in challenging environments.

Autonomous operation: Visual SLAM algorithms do not require any external assistance, making them ideal for use in autonomous applications.

Scalability: Visual SLAM algorithms can be extended to handle larger environments, making them suitable for use in a range of applications, although large-scale operation remains an active technical challenge.

Versatility: Visual SLAM algorithms can be used with a variety of sensors, including monocular, stereo, and RGB-D cameras, making them suitable for a range of applications.

Reduced cost: Visual SLAM algorithms can be implemented using low-cost hardware, reducing the cost of deployment and maintenance.

Improved accuracy: Visual SLAM algorithms can provide high accuracy in camera pose estimation, making them ideal for applications that require precise tracking.

Easy to use: Visual SLAM algorithms are easy to implement and use, reducing the development time and cost for applications that require visual mapping and localization.

Improved safety: Visual SLAM algorithms can be used to improve safety in applications such as autonomous vehicles and drones by providing real-time environmental mapping and camera pose estimation.

Disadvantages of Visual SLAM:

Dependence on image quality: Visual SLAM algorithms can be affected by image quality, such as low lighting and textureless environments, which can impact their performance.

Limited field of view: The cameras used for Visual SLAM may have a limited field of view, which can impact the ability to accurately map large environments.

Increased power consumption: Visual SLAM algorithms can consume a significant amount of power, which can be a constraint in battery-powered applications.

Incomplete mapping: Visual SLAM algorithms may not be able to fully map an environment, resulting in gaps or missing information in the map.

Limited accuracy: Visual SLAM algorithms may not be accurate enough for applications that require precise mapping and localization.

Integration with other sensors: Visual SLAM algorithms may require integration with other sensors, such as LIDAR, to provide a complete picture of the environment.

Vulnerability to errors: Visual SLAM algorithms can be susceptible to errors, such as feature misdetection, which can impact their performance.

Requirement for training data: Visual SLAM algorithms that rely on learned components may require training data to operate accurately, which can be time-consuming and resource-intensive to collect.

Limited functionality: Visual SLAM algorithms may only provide limited functionality, such as camera pose estimation and mapping, making them unsuitable for applications that require more advanced features.

Limited generalization: Visual SLAM algorithms may not generalize well to new environments, which can impact their performance and limit their use in new applications.

Applications of Visual SLAM:

Visual SLAM (Simultaneous Localization and Mapping) has numerous applications in various fields including:

Robotics: Visual SLAM is widely used in autonomous robots for navigation and mapping of the environment. It helps the robots to estimate their position and create a map of their surroundings for navigation purposes.

Augmented Reality (AR) and Virtual Reality (VR): Visual SLAM algorithms can be used to provide real-time tracking of the user’s head and hand movements to enhance the AR and VR experience.

Drones and UAVs: Visual SLAM is commonly used in drones and unmanned aerial vehicles (UAVs) for navigation and mapping of large areas. It helps the drones to fly autonomously while avoiding obstacles and creating a map of the environment.

Automotive: Visual SLAM is used in advanced driver assistance systems (ADAS) for obstacle detection, lane departure warning, and autonomous driving.

Surveillance and security: Visual SLAM can be used in security and surveillance systems to detect and track moving objects in real-time.

Autonomous Navigation: Visual SLAM is used to create real-time maps and track the location of autonomous vehicles such as drones, robots, and self-driving cars. This allows the vehicles to navigate their environment without relying on GPS or external sensors.

Virtual Reality: Visual SLAM is also used in virtual reality applications. It allows virtual environments to be created based on real-world objects and environments, providing a more immersive experience.

Surveying: Visual SLAM can be used in surveying and mapping applications to create detailed maps of large environments such as mines, forests, and construction sites.

Industrial Inspection: Visual SLAM can be used in industrial inspection applications to create maps of industrial environments and track the position of inspection tools and cameras.

Agricultural Automation: Visual SLAM can be used in agriculture to create maps of farm fields and track the position of agricultural machinery. This allows for more efficient and accurate farming operations.

Gaming: Visual SLAM can be used in gaming applications to create more immersive and interactive gaming experiences.

Healthcare: Visual SLAM can be used in healthcare applications to create maps of medical environments such as hospitals and clinics, and to track the position of medical equipment and personnel.

Difference between SLAM and Visual SLAM

| Feature | SLAM | Visual SLAM |
|---|---|---|
| Definition | SLAM is a method to build a map of an unknown environment while simultaneously estimating the robot’s position within that environment. | Visual SLAM is a type of SLAM that uses visual information from cameras and/or other sensors to build a map and estimate the robot’s position. |
| Input Data | SLAM algorithms can use data from a variety of sensors, including LIDAR, ultrasonic, and infrared sensors. | Visual SLAM algorithms primarily use visual data from cameras. |
| Map Representation | SLAM algorithms can use various map representations, including grid maps, topological maps, and feature-based maps. | Visual SLAM algorithms typically use feature-based maps. |
| Computational Complexity | SLAM algorithms can be computationally expensive, especially when using high-resolution maps or multiple sensors. | Visual SLAM algorithms are often less computationally expensive than SLAM algorithms that use multiple sensors, but they can still be computationally intensive. |
| Accuracy | The accuracy of SLAM algorithms depends on the quality and resolution of the sensor data, as well as the algorithm’s implementation. | The accuracy of Visual SLAM algorithms is often limited by the resolution and quality of the visual data, as well as the presence of visual features in the environment. |
| Robustness | SLAM algorithms can be robust in challenging environments, such as those with limited lighting or occlusions. | Visual SLAM algorithms can be less robust in challenging environments, such as those with limited lighting or large occlusions. |
| Real-time Performance | SLAM algorithms can provide real-time performance, especially when using fast sensors and optimized algorithms. | Visual SLAM algorithms can provide real-time performance, but this is often limited by the processing power of the computer and the number of features in the environment. |
| Applications | SLAM has a wide range of applications, including autonomous vehicles, robotics, and augmented reality. | Visual SLAM has applications in augmented reality, robotics, and computer vision, among others. |

Difference between vSLAM and Visual Odometry

| Feature | Visual SLAM | Visual Odometry |
|---|---|---|
| Definition | Visual Simultaneous Localization and Mapping | Visual estimation of the motion of a camera without reference to an external source |
| Purpose | To build a map of the environment and determine the position of the camera within that map | To estimate the relative motion of a camera within an environment |
| Map | Builds a map of the environment | No map of the environment is built |
| Scale | Can work on large scale environments | Works best on smaller scale environments |
| Accuracy | Generally more accurate than visual odometry | Generally less accurate than visual SLAM |
| Robustness | More robust to environmental changes | Less robust to environmental changes |
| Computational complexity | Higher computational complexity | Lower computational complexity |
| State estimation | Estimates both camera pose and map of the environment | Only estimates camera pose |
| Environment | Works in unknown environments and can correct drift when it revisits mapped areas | Works in unknown environments but cannot correct drift when it revisits a place |